November 21, 2024

In the future, you may be able to use your smartphone or computer without lifting a finger. That’s because the technology that would allow it, the brain-computer interface (BCI), has advanced rapidly, potentially allowing people to control devices with their minds.

Research into brain-computer interfaces has primarily sought to help people with disabilities, in particular those unable to communicate. That research has resulted in tremendous breakthroughs, like a recent one that allowed a paralyzed man with amyotrophic lateral sclerosis (ALS), a degenerative condition also known as Lou Gehrig’s disease, to speak. Brain-computer interfaces are also used to help amputees control robotic arms.

“Brain-computer interfaces are improving medical outcomes significantly,” said IEEE Senior Member Santhosh Sivasubramani. “They offer new treatment options for various conditions, including helping stroke survivors regain movement and independence. These technologies improve the quality of life for many.”

Foundational Research

Researchers first began exploring the idea of a brain-computer interface in 1929 with the development of electroencephalography (EEG). The ability to measure electrical impulses in the human brain made some wonder whether it would be possible to decode people’s intentions from those electrical signals. In the 1960s, researchers conducted experiments showing that monkeys and cats could be trained to slow their brain rhythms in exchange for food. That line of research led to new questions. If animals could control the electrical impulses in their brains, could those impulses be used to control objects?

By 1988, the answer was yes. Researchers at the Laboratory of Intelligent Machines and Bioinformation Systems in Skopje, Macedonia, used signals from an EEG machine to control a robotic arm. Development was not straightforward, however. It took 11 years for other researchers to replicate the feat.

Components of a BCI

A brain-computer interface needs three main parts. First, it needs a way to pick up brain signals. Second, it has to process or make sense of these signals. Finally, it needs to send the signals to a computer or device, like a robotic arm or a wheelchair, to make something happen.
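To make the three parts concrete, here is a minimal sketch in Python. It is a toy illustration, not how any real BCI is built: the function names `acquire`, `decode` and `run_pipeline`, the `RoboticArm` class, the threshold value and the sample voltages are all hypothetical. A real system would filter and classify the signals rather than apply a single amplitude threshold.

```python
from dataclasses import dataclass, field
from typing import List


# Part 1 (hypothetical): pick up brain signals.
# Here the "sensor" is simply a list of voltages in microvolts.
def acquire(window: List[float]) -> List[float]:
    return list(window)


# Part 2 (hypothetical): make sense of the signals.
# This toy rule compares the mean absolute amplitude to a threshold.
def decode(samples: List[float], threshold: float = 10.0) -> str:
    mean_abs = sum(abs(s) for s in samples) / len(samples)
    return "MOVE" if mean_abs > threshold else "REST"


# Part 3 (hypothetical): send the result to a device that acts on it.
@dataclass
class RoboticArm:
    log: List[str] = field(default_factory=list)

    def execute(self, command: str) -> None:
        self.log.append(command)


def run_pipeline(windows: List[List[float]], device: RoboticArm) -> List[str]:
    """Run each window of samples through all three parts in order."""
    for window in windows:
        device.execute(decode(acquire(window)))
    return device.log


arm = RoboticArm()
print(run_pipeline([[2.0, -3.0, 1.5], [15.0, -20.0, 12.0]], arm))
# prints ['REST', 'MOVE']
```

Keeping the three parts as separate functions mirrors the description above: any one of them (for example, the decoder) can be swapped for a more sophisticated version without touching the others.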

Observing Brain Signals

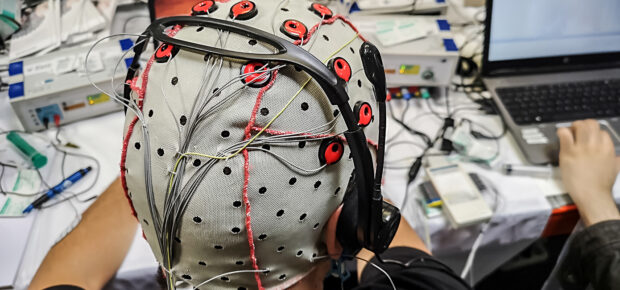

The most common type of BCI uses noninvasive methods to measure electrical activity in the brain. This is usually done with sensors placed on the scalp.

Noninvasive methods can’t pick up activity deep in the brain, and the signals they do record are weak because they must pass through the skull and scalp, which can limit how useful they are. But they are generally safer for patients.

Invasive methods, which involve surgery to implant sensors directly on the brain, have also been developed over the last 20 years. The best-known invasive device is the Utah array, which has 100 electrodes that penetrate about 1 millimeter into the cortex, the brain’s outer layer. It has helped drive many important breakthroughs in this field.

Invasive methods carry risks, such as infection, because they rely on a wire that passes through the skull to carry data and power. For this reason, they’re not very common. By 2023, only around 50 people had neural implants.

Recently, a third option has appeared: minimally invasive implants. These allow doctors to place electrodes in the brain through a small slit in the skull or even through a vein leading to the brain. This approach strikes a good balance by lowering the medical risks of invasive BCIs while also giving better signal quality than noninvasive ones.

Contributions From Semiconductor Industry

The rapid pace of advancement in brain-computer interfaces relies on progress in other fields, such as the semiconductor industry.

“Semiconductor manufacturing techniques are now being applied to medicine, allowing for the creation of extremely small electrodes,” said IEEE Senior Member Eleanor Watson. “Thin film microelectrode arrays can now lie on the surface of the cortex without penetrating it, offering a less invasive option.”

Other advances include the use of artificial intelligence to improve how BCIs interpret brain signals.

Role of Artificial Intelligence in BCIs

When a BCI measures a signal in the brain, it has to turn that signal into commands that control an external device. That translation is difficult.

One reason is that the brain is usually doing several things at the same time. For example, when the brain sends a signal to move a robotic arm, it might also send signals to blink or breathe.
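The sketch below illustrates why this is a problem and one classic way to tease signals apart. It is a toy example with invented values: a single hypothetical electrode records the sum of a large, slow 1 Hz "blink-like" signal and a small 10 Hz rhythm standing in for movement intent. The sampling rate, frequencies and amplitudes are assumptions chosen for clarity, and the names `mixed_signal` and `amplitude_at` are hypothetical. Because the two sources oscillate at different frequencies, a discrete Fourier transform can measure each one separately even though they arrive mixed together.

```python
import math

FS = 250  # assumed sampling rate in Hz
N = FS    # one-second window, so each whole-number frequency is an exact DFT bin


def mixed_signal(n: int) -> list[float]:
    """Hypothetical single-electrode recording: a large 1 Hz blink-like
    signal plus a small 10 Hz rhythm standing in for movement intent."""
    return [
        40.0 * math.sin(2 * math.pi * 1 * t / FS)    # blink-like signal (microvolts)
        + 5.0 * math.sin(2 * math.pi * 10 * t / FS)  # movement-related rhythm (microvolts)
        for t in range(n)
    ]


def amplitude_at(signal: list[float], freq_hz: int) -> float:
    """Amplitude of one frequency component, computed from a single DFT bin."""
    n = len(signal)
    re = sum(x * math.cos(2 * math.pi * freq_hz * t / FS) for t, x in enumerate(signal))
    im = sum(x * math.sin(2 * math.pi * freq_hz * t / FS) for t, x in enumerate(signal))
    return 2.0 * math.hypot(re, im) / n


sig = mixed_signal(N)
for f in (1, 10, 20):
    print(f, round(amplitude_at(sig, f), 2))
```

Run as written, this should report amplitudes of about 40 at 1 Hz, 5 at 10 Hz and essentially 0 at 20 Hz: the small movement-related rhythm is recoverable even though the blink-like signal dominates the raw trace. Real recordings are far messier, with overlapping frequencies and noise, which is where the AI methods described next come in.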

Artificial intelligence (AI) is increasingly used to help reduce the extra “noise” in brain signals. But AI does more than just cut down noise. It also helps pick out the patterns in raw brain signals that reveal what the user wants to do, and then classifies the user’s intent by analyzing those patterns. Using supervised learning, AI models are trained on brain activity recordings labeled with the intended action, so they can learn to predict actions accurately.
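The supervised-learning step can be sketched with one of the simplest possible classifiers, a nearest-centroid model. This is a deliberately basic stand-in, not the method any particular BCI uses; real systems may use more capable models such as neural networks. The two-number feature vectors (imagine signal strength measured at two electrodes), the labels `"left"` and `"right"`, and the training values are all invented for illustration, and the function names are hypothetical.

```python
import math
from collections import defaultdict

Feature = tuple[float, float]


def train_centroids(examples: list[tuple[Feature, str]]) -> dict[str, Feature]:
    """Supervised training: average the feature vectors recorded for each labeled intent."""
    sums: dict[str, list[float]] = defaultdict(lambda: [0.0, 0.0])
    counts: dict[str, int] = defaultdict(int)
    for (a, b), label in examples:
        sums[label][0] += a
        sums[label][1] += b
        counts[label] += 1
    return {label: (s[0] / counts[label], s[1] / counts[label]) for label, s in sums.items()}


def predict(centroids: dict[str, Feature], x: Feature) -> str:
    """Classify a new feature vector as the intent whose average pattern is closest."""
    return min(centroids, key=lambda label: math.dist(centroids[label], x))


# Invented toy training data: (feature vector, intended action).
training = [
    ((2.0, 8.0), "left"),
    ((2.5, 7.5), "left"),
    ((8.0, 2.0), "right"),
    ((7.0, 3.0), "right"),
]
centroids = train_centroids(training)
print(predict(centroids, (3.0, 7.0)))  # prints left
```

Training only needs labeled examples of each intent; afterward, each new measurement is matched to the closest learned pattern. The same train-then-predict structure holds for more powerful models, which replace the simple averaging and distance rule with learned decision boundaries.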

More Than Therapeutic?

Although the primary focus of brain-computer interface research is on medical applications, there are other sectors where these technologies could eventually have an impact. But the field is still in its infancy, so playing a video game with your mind remains a distant prospect.

Still, several near-term benefits exist.

“Scientific research stands to benefit greatly, as BCIs provide valuable data about brain function in various states and conditions,” Watson said. “In the field of assistive technology, BCIs could help people with disabilities interact more effectively with devices and their environment. There’s also potential with regard to improved human-computer interaction, where BCIs might offer new ways to control computers or other devices, linking our minds with machines, and perhaps enhancing our cognitive capabilities, our concentration and our mood.

“Despite these potential applications, it’s clear that the current emphasis of BCI research remains firmly on medical and therapeutic uses, with other potential applications being secondary considerations at this stage of development.”
