September 29, 2017

Ayanna Howard: IEEE Senior Member, Professor and Linda J. and Mark C. Smith Endowed Chair in Bioengineering in the School of Electrical and Computer Engineering at the Georgia Institute of Technology

IEEE Transmitter: What is your area of robotics expertise?

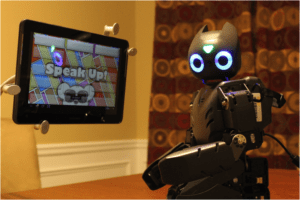

Ayanna: The area that I focus on now, and where my expertise lies, is in the domain of healthcare robotics. Currently, I am working on developing a robot coach, which offers in-home therapy for children with disabilities, such as cerebral palsy. The main robots I’ve worked with in this domain are humanoid robots, one being a small robot called Darwin.

IEEE Transmitter: Why is the area of robotics with which you are involved important? How is it benefitting society today, and how will it benefit society in the future?

Ayanna: Statistically, in countries with life expectancies over 70 years of age, individuals will spend approximately eight years of their life living with a disability. That’s a staggeringly high number of individuals who are affected, when you think about it. On top of that, living with a disability, such as a motor or vision impairment, impacts how you perform your day-to-day activities. Robots that can assist in the healthcare space address both the shortage of available human resources and the need for technology that can enhance the quality of life for individuals with disabilities. The work I’m doing is trying to address this need. Hopefully, it will have a positive impact on people’s lives, and that impact will only grow as the technology matures.

Transmitter: What are the functions performed by the robots you are working with? Why is this special?

Ayanna: The robots I work with are designed to address the needs of children with disabilities, and they interact with those children in two ways. First, they interact as a “coach.” “Corrective” coaching, as we call it, allows the robot to monitor what the child is doing and provide guidance as to what the child should or shouldn’t be doing to enhance their outcomes. For example, if the child has a disability that impacts their fine motor skills, the robot can monitor that and provide coaching as to what the best exercises would be for the child to increase his or her range of motion.

The second way the robots interact with children is motivational. Children, irrespective of their abilities, learn best when they receive positive reinforcement. For example, by placing a robot within an inclusive classroom, the robot can help monitor for when the child is doing something positive and provide encouragement to the child.

Both of these functions are special because the robots are matching the processes of how kids learn. The corrective coaching and motivational approaches allow the robots, and even the children, to learn new skill sets that they can then apply to other situations.

Transmitter: How long is the “learning process” in your area of robotics? How difficult is it for your robot to learn? What challenges did you face in teaching your robot?

Ayanna: There are so many components of a robotic system, but if I had to put a time on the entire learning process to get to a functional prototype, I’d say about a year. It only takes about two months to program the robot with a basic set of behaviors, but for that robot to really be fully functioning, where it’s learning from its environment and applying its new learning to other situations, that is a longer process. The challenge, specifically when it comes to children, is learning and figuring out how you integrate and customize the different interactions that are needed to personalize the interaction with each child. The fundamental algorithms aren’t the complicated part. It’s the robustness, integration of the behaviors, identifying the correct inputs and outputs — and getting the system to function in the real world — that’s the complicated part.

Transmitter: We understand robots are starting to learn on their own. How is technology enabling robots to learn on their own?

Ayanna: When it comes to how robots learn today, it is very application-specific. Robots can learn a lot on their own when the inputs and outputs that are collected are focused on one task or one application. The methods used can thus be optimized for the hardware, the environment, and the task. Interestingly enough, technologies such as cloud computing are starting to enable the collection and processing of larger amounts of data. Because of that, robots should eventually be capable not only of learning more, but also of sharing what they learn across robot platforms.

Transmitter: How do you envision robots learning in the next 5-10 years?

Ayanna: So, let’s say you have a mobile manipulator robot in your home whose function is to pick up toys off the floor. You may ask yourself, why can’t this same robot also unload the dishwasher? In the future, I see robots as actually learning what they don’t know and then independently finding a way to learn that new function in real-time. They could do this by learning from other robots with the same form factor and asking themselves “How do I incorporate this new task and still maintain my core tasks?” So, the robot that only knows how to grab toys off the floors could then learn from another robot as to how to also unload the dishwasher. It’s not generalized learning but can be thought of as iterative learning.
