July 25, 2024

In the first six months of 2024 alone, over 300 articles focused on creating detection tools for deepfakes have been published in the IEEE Xplore digital library.

“While AI is enabling deepfake algorithms to produce harder-to-detect fakes, AI-enabled detection technologies are keeping pace by employing diverse techniques and algorithms to identify fakes,” said IEEE Senior Member Aiyappan Pillai.

What Are Deepfakes?

The term deepfake combines two terms: deep learning and fake, as in forgery. Deepfakes are realistic AI-generated videos, audio clips or still images that depict real people doing or saying things they didn’t do or say. They’ve appeared in political realms in recent years, and they’ve also affected the world of entertainment, where songs that imitate popular musical acts, usually without the performers’ consent, threaten to disrupt the entertainment economy.

Emerging Detection Methods

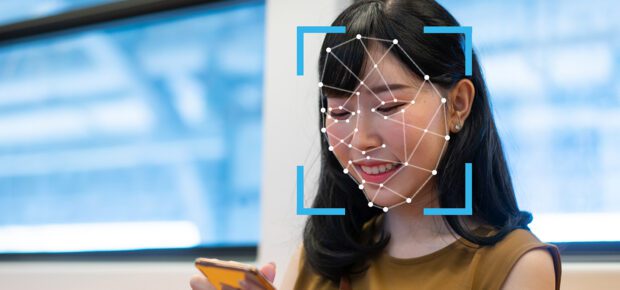

Two broad categories of technology exist for spotting deepfakes, and significant research has been devoted to determining how well they work.

Machine Learning: One method for identifying deepfakes involves feeding a machine learning model large numbers of deepfakes and real content so it can learn to spot the differences between them. These techniques may not involve machine vision at all. Rather, they convert the image into data and learn from its patterns. One challenge is that this method may have trouble identifying a new deepfake if it differs significantly from the data the model was trained on.
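To make the training idea and its weakness concrete, here is a minimal sketch in Python. It is not how any production detector works: the two-number "feature vectors" are synthetic stand-ins for data derived from images, and the nearest-centroid classifier is a deliberately simple substitute for a deep learning model. All function names are hypothetical.

```python
import random

def centroid(rows):
    """Average each feature column to get the 'typical' example of a class."""
    n = len(rows)
    return [sum(col) / n for col in zip(*rows)]

def sq_dist(a, b):
    return sum((x - y) ** 2 for x, y in zip(a, b))

def train(real_rows, fake_rows):
    """'Training' here just memorizes the average real and average fake."""
    return {"real": centroid(real_rows), "fake": centroid(fake_rows)}

def predict(model, row):
    """Label a sample by whichever class average it sits closest to."""
    return min(model, key=lambda label: sq_dist(model[label], row))

def sample(rng, center, n, spread=0.1):
    """Draw n synthetic feature vectors clustered around `center` (assumed data)."""
    return [[rng.gauss(c, spread) for c in center] for _ in range(n)]

def detection_rate(model, fake_rows):
    """Fraction of known fakes that the model labels as fake."""
    return sum(predict(model, r) == "fake" for r in fake_rows) / len(fake_rows)

rng = random.Random(0)  # fixed seed so the run is repeatable
model = train(sample(rng, [0.0, 0.0], 200),   # real content
              sample(rng, [1.0, 1.0], 200))   # fakes from a known generator

seen_style_fakes = sample(rng, [1.0, 1.0], 100)   # resemble the training fakes
new_style_fakes = sample(rng, [-1.0, 0.2], 100)   # a generator never seen in training

print("seen-style fakes caught:", detection_rate(model, seen_style_fakes))
print("new-style fakes caught: ", detection_rate(model, new_style_fakes))
```

In this toy setup the model catches fakes that resemble its training data and misses nearly all of the fakes drawn from the unfamiliar pattern, which is the generalization problem described above.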

Semantic Analysis: In contrast to machine learning methods, which rely on raw data, semantic analysis looks at the content and context of the image using the same machine vision techniques that help artificial intelligence systems recognize apples or books in a picture. These methods can analyze the pattern of blood flow in a speaker’s face, the shape of their head or whether their appearance is consistent over time. Semantic analysis also covers relationships between objects that don’t make sense. For example, imagine an architectural rendering of a bathroom. An AI-generated image may place a shower head in a location where it cannot be used functionally.
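One of the checks mentioned above, whether a person's appearance is consistent over time, can be sketched in a few lines of Python. This is an illustration only: it assumes some upstream vision system has already measured the speaker's head width in each video frame, and the function name, threshold and sample values are invented for the example.

```python
def inconsistent_frames(measurements, max_jump=0.15):
    """Return indices of frames whose value changes by more than
    `max_jump` (as a fraction) compared with the previous frame."""
    flagged = []
    for i in range(1, len(measurements)):
        prev, cur = measurements[i - 1], measurements[i]
        if prev and abs(cur - prev) / abs(prev) > max_jump:
            flagged.append(i)
    return flagged

# Hypothetical head-width measurements in pixels, one per frame.
head_width_px = [120, 121, 119, 150, 120, 121]
print(inconsistent_frames(head_width_px))  # [3, 4]
```

Frames 3 and 4 are flagged because the head abruptly grows and then shrinks, a change a real head would not make between consecutive frames. A practical system would combine many such signals rather than rely on a single threshold.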

Watermarking

The need to identify deepfakes has led some generative AI companies to create markings for this purpose. In some cases, these markings are visible to users; in others, they are not.

“One of the most effective techniques is digital watermarking of the images generated using the generative AI platforms,” said Rahul Vishwakarma, IEEE Senior Member.
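As a rough illustration of a marking that users cannot see, the Python sketch below hides a short bit pattern in the least significant bit of each pixel value. This is a classic textbook technique chosen for simplicity, not the scheme any particular generative AI company uses; the signature and function names are assumptions made for the example, and a simple mark like this would not survive compression or resizing.

```python
SIGNATURE = [1, 0, 1, 1, 0, 0, 1, 0]  # hypothetical 8-bit mark

def embed(pixels, bits):
    """Write each bit into the lowest bit of the corresponding pixel (0-255)."""
    if len(bits) > len(pixels):
        raise ValueError("image too small for the watermark")
    marked = list(pixels)
    for i, bit in enumerate(bits):
        marked[i] = (marked[i] & ~1) | bit  # clear the lowest bit, then set it
    return marked

def extract(pixels, n_bits):
    """Read back the lowest bit of the first n_bits pixels."""
    return [p & 1 for p in pixels[:n_bits]]

def is_marked(pixels):
    return extract(pixels, len(SIGNATURE)) == SIGNATURE

original = [200, 13, 77, 54, 91, 180, 33, 250, 17]  # toy grayscale pixel values
marked = embed(original, SIGNATURE)

print(is_marked(marked))    # True
print(is_marked(original))  # False
```

Each pixel changes by at most one brightness level, so the mark is invisible to a viewer but can be read back by software that knows where to look.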

Questions of Bias

About five or six commonly used data sets of videos and images of people are used to train deep learning models to detect deepfakes. One data set consists entirely of celebrities. One challenge researchers face is that the people featured in these data sets are more likely to be white and male. That has led to questions over whether deepfake detection tools may have a harder time when faced with data from people from diverse backgrounds.
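One way to investigate this question is to measure a detector's accuracy separately for each group of people rather than as a single overall number. The Python sketch below shows the bookkeeping; the group labels and results are invented toy values, not findings from any study.

```python
from collections import defaultdict

def accuracy_by_group(records):
    """records: iterable of (group, true_label, predicted_label) tuples.
    Returns the fraction of correct predictions for each group."""
    correct = defaultdict(int)
    total = defaultdict(int)
    for group, truth, predicted in records:
        total[group] += 1
        correct[group] += (truth == predicted)
    return {group: correct[group] / total[group] for group in total}

# Toy results, made up for illustration only.
results = [
    ("group_a", "fake", "fake"), ("group_a", "real", "real"),
    ("group_a", "fake", "fake"), ("group_a", "real", "real"),
    ("group_b", "fake", "real"), ("group_b", "real", "real"),
    ("group_b", "fake", "fake"), ("group_b", "real", "fake"),
]
print(accuracy_by_group(results))  # {'group_a': 1.0, 'group_b': 0.5}
```

A gap like the one in this toy output would signal that a detector works less reliably for some groups than for others, even if its overall accuracy looks strong.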

Are Humans Any Better?

While deepfakes are realistic, humans can spot them. One recent study published in IEEE Security & Privacy pitted humans against machines. Researchers found that humans were, on average, able to identify about 71% of deepfakes, while cutting-edge detection methods identified 93%.

However, some deepfake images fooled the detection algorithms, while humans were able to spot the hoax.

Some people are much better at spotting deepfakes than others, but researchers are only beginning to investigate why. In another study, researchers examined how well police officers and “super-recognizers” could detect deepfakes. Super-recognizers, whose abilities are certified by a lab, are people who are exceptionally good at recognizing and identifying faces. The study showed that super-recognizers were no better at spotting deepfakes than ordinary people. This suggests that telling whether something is a deepfake is a different skill from recognizing faces.

Learn More: To see a presentation on cutting-edge deepfake detection methods, check out this video from the IEEE Signal Processing Society.
