December 19, 2024

Can Large Language Models — those intelligent chatbots that produce human-like answers to our prompts — influence our opinions?

An experiment described in the magazine IEEE Intelligent Systems suggests that the answer is yes. The research has implications for teachers grading papers, for employee evaluations and for many other situations that could affect our lives.

The Research

The study’s design focuses on the differing opinions offered by two prominent LLMs. Each of these LLMs was tasked with evaluating two different patent abstracts on a scale from 1 to 10, focusing on qualities such as feasibility and disruptiveness.

The study’s author provided the patent abstracts and the LLM-generated scores to different groups of graduate students. Each group saw only one rating — either the higher or the lower one. Unaware of what other groups had been given, the students were then asked to rate the patent abstracts themselves.

Groups that saw a higher LLM rating (like a “9”) gave higher evaluations than groups that saw a lower rating (like a “4”). However, they did not just copy the scores. Instead, those shown a “9” gave an average rating of about 7.5, while those shown a “4” gave an average rating slightly above 5. This suggests that although the LLM’s rating influenced them, the participants still made their own judgments.
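A rough worked check, using only the approximate averages reported above, shows how far the students' ratings moved relative to the LLM ratings they saw. Here $\bar{r}$ stands for a group's average rating, a notation introduced only for this illustration:

$$
\frac{\bar{r}_{\text{shown 9}} - \bar{r}_{\text{shown 4}}}{9 - 4} \approx \frac{7.5 - 5}{5} = 0.5
$$

Because the lower group's average was slightly above 5, the actual ratio is a little under one half: the gap between the groups was roughly half the gap between the LLM ratings, which is consistent with the students being pulled toward the LLM's score without simply adopting it.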

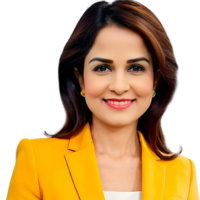

“The experiment results suggest that AI tools can affect decision-making tasks, like when teachers grade student research papers or when enterprises evaluate employees, products, software or other intellectual objects,” said IEEE Senior Member Ayesha Iqbal. “If different AI tools give different ratings, and people depend on them, people can give different ratings to the same idea. That raises an important question: Do we want to be biased toward its recommendations?”

When Should We Use AI to Help Form Judgments?

It's fairly common for professionals to use LLMs to help with the first draft of tasks like grading papers or evaluating projects. Professionals may not treat the LLM's output as a final product, but it provides a useful, time-saving starting point. Given the anchoring effect the study describes, in which an initial rating pulls later judgments toward it, is that a good idea?

The research suggests that, like people, LLMs offer reasons for or against certain ideas, so relying on an LLM might be akin to collaborating with a peer. At the same time, individual LLMs showed distinct tendencies that could make them more or less useful. Some tend to be more optimistic and give longer answers; others can be more pessimistic and give shorter ones.

The study's author suggests that educators might use a single LLM for tasks such as grading papers, to keep ratings consistent, but might consult multiple LLMs for more complex tasks, such as evaluating business projects.

“It is important to establish boundaries and limitations for AI use in our personal and professional lives,” Iqbal said. “We need to determine when and where AI technology is appropriate and beneficial and identify situations where human judgment and intervention are necessary. Overreliance on AI can be avoided by maintaining control over technology usage and decision-making processes.”
