October 24, 2024

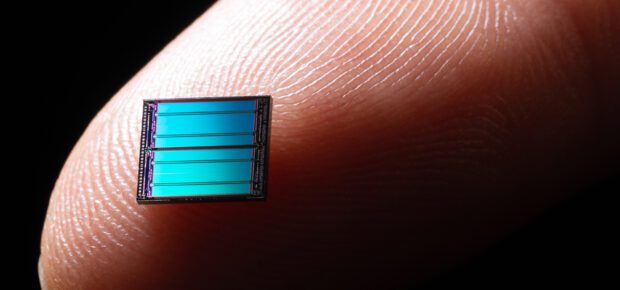

You’ve probably heard of large language models, the AI systems that generate human-like text. But what about small language models? Researchers are pushing for smaller, more compact AI systems to address a range of challenges created by AI’s growing hunger for data. And it’s not only the generative AI models making headlines today that need to shrink; so do the AI systems that could operate industrial facilities, smart cities or autonomous vehicles.

The Challenge of Big AI Models

When you use artificial intelligence, whether on your phone or your laptop, most of the actual computing happens in a data center. That’s because the most popular AI models are computationally expensive; your laptop probably doesn’t have enough computing power to run the query. These AI systems also use significant amounts of energy. By some estimates, a single query to a generative AI model (asking a question like “How does generative AI work?”) consumes as much electricity as a light bulb left on for an hour.

That presents two challenges for the use of AI. First, it raises concerns about the sustainability of artificial intelligence, because generating the electricity that powers AI also adds to greenhouse gas emissions.

In “The Impact of Technology in 2025 and Beyond: an IEEE Global Study,” a recent survey of global technology leaders, 35% said the usefulness of AI greatly outweighs its energy consumption, while 34% said AI’s energy consumption and usefulness are in good balance. About one-fifth (21%) said the benefits of AI are significant but its high energy use is still a concern, while 8% said the massive amount of energy AI uses outweighs its benefits.

Second, it means that anything that relies on artificial intelligence needs either more onboard power to operate or a connection to a data center.

Cutting-edge techniques to shrink AI are having some success.

“They [compact AI systems] use substantially less power, often operating in the range of watts rather than the kilowatts or megawatts consumed by large data center systems,” said IEEE Member Jay Shah.

Who Needs Compact AI?

Smaller, more energy-efficient AI systems could be used in a wide range of applications, such as autonomous vehicles.

“Next-generation, low-power AI accelerators are crucial for the future of autonomous vehicles in terms of long-term reliability and reduced power consumption,” Shah said. “They could enable real-time decision-making and more compact designs.”

Compact AI would also be a boon to robotics, because it would lower robots’ power requirements.

IEEE Senior Member Cristiane Agra Pimentel said compact AI systems would also be useful in industrial settings where smaller controls could automate plant processes.

“The use of compact AI in the industrial area will be increasingly applicable to machine operation controls, product traceability controls and supply chain systems management,” Pimentel said.

Small AI Has Trade-offs

Large language models are often general-purpose. They can help you write a college essay or build a website. Compact systems, by contrast, could be optimized for specific tasks, such as serving as a company’s customer chatbot or autocompleting computer code.

Compact AI systems are also, for now, less accurate, because they often use less data.

“These trade-offs are often acceptable,” Shah said, “given the benefits of lower power consumption, faster inference times and the ability to run AI on edge devices. Researchers and developers continue to work on improving the accuracy of compact AI systems while maintaining their efficiency advantages.”

Learn More: The IEEE Computer Society recently published an in-depth article on the benefits and challenges of small language models.
